I’m taking, at my own pace, the free online course “Foundations of humane technology” by the Center for Humane Technology (which I first heard of after watching the excellent documentary “The Social Dilemma”). These are my notes.

- Module 1 – setting the stage

- Module 2 – respecting human nature

- Module 3 – minimizing harmful consequences

- Module 4 – centering values

- Module 5 – creating shared understanding

- Module 6 – supporting fairness & justice

- Module 7 – helping people thrive

- Module 8 – ready to act

“Humanity’s biggest problems require a collective intelligence that’s smarter than any of us individually, but we can’t do that if our tools are systematically downgrading us.”

Center for Humane Technology

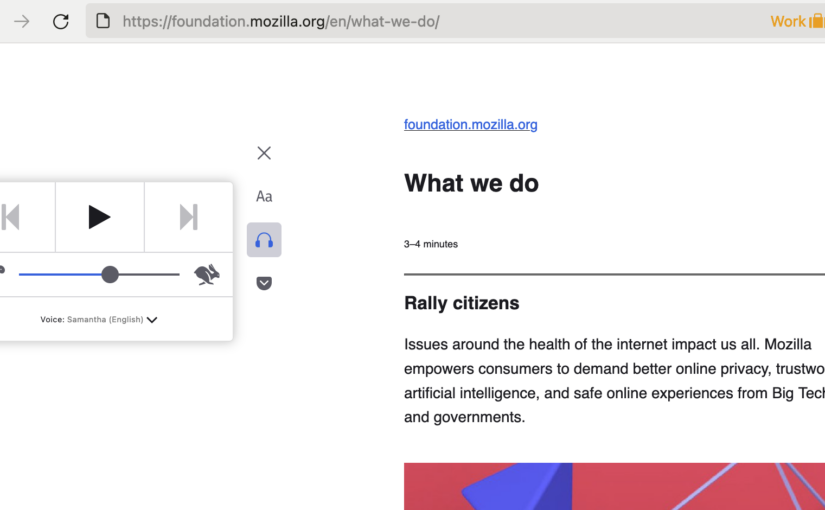

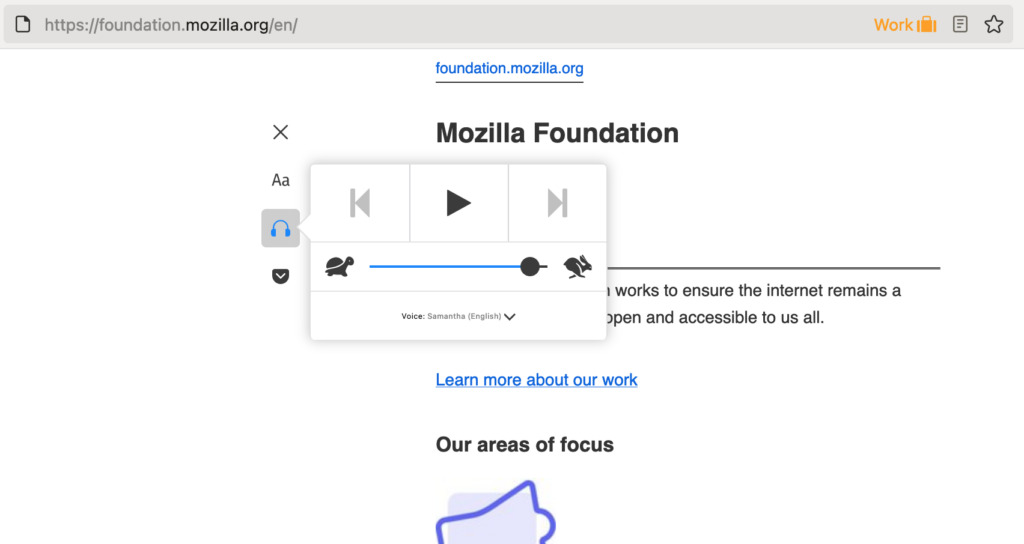

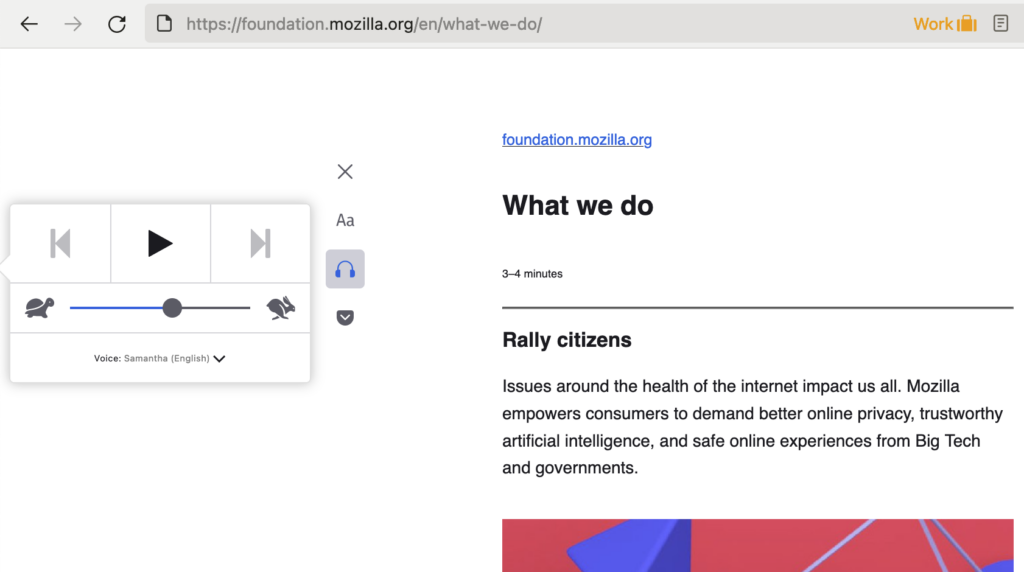

A shared understanding helps us make sense of and process noisy information to inform better choices. For example, it’s one’s ability to assess the trustworthiness of claims, or catalogue the values held by a community, or find common ground across multiple viewpoints. It can be achieved through responsible journalism, the scientific method, and nonviolent communication.

This module focuses on social media in particular because it warps reality and thus undermines our capacity for shared understanding and effective collaboration.

Beware the common social media distortions

These distortions, or side effects of social media and information technologies, are manipulation artifacts that people are more or less aware of, and that alter the way people grasp concepts or understand reality.

Engaging content distortion

People’s curated best moments distort our view of their lives and even of our own, because social media encourages a race to win the virality lottery. When some flood the attention landscape with engaging content, everyone else must mimic them to be heard and seen.

Moral outrage distortion

Algorithms optimize for moral outrage because negative or inflammatory language spreads more quickly than neutral, nuanced, or positive language. This affects television and news, which must keep up to stay relevant. As a result, negative caricatures are disproportionately represented and it feels like there is more outrage than there actually is. Public discourse dominated by artificial moral outrage makes progress impossible.

“Extreme emotion” distortion

This leverages subconscious trauma or fears by feeding people content they can hardly look away from.

Amplification distortion

This is when social media trains users to present more extreme views and rewards those who do; these views then get amplified.

Microtargeting distortion

This is the ability to deliver a specific resonant message to one group while delivering the opposite message to another. If these groups don’t overlap much, the contradictory messaging becomes invisible and social conflict can be orchestrated.

“Othering” distortion

This relates to the “fundamental attribution error” cognitive bias, where we are more likely to attribute our own errors to our environment or life circumstances, and others’ errors to their personality. Social media provides ample evidence (which can be, and usually is, strung together by algorithms) of the bad behaviour of particular groups of “others”.

Disloyalty distortion

More than a distortion, it’s really a side effect whereby you may be attacked by members of your own groups when you express compassion or try to understand another group.

Information flooding distortion

For example, it is very easy to engineer fake accounts and bots that make topics trend and thus influence what people are exposed to (hence altering their reality).

Weak-journalism distortion

More than a distortion, it’s a side effect of the tension between substance and attention-grabbing headlines, which forces news organisations to invest less in depth and nuance.

🤔 Personal reflection: Distortions

Think of your work or consider technology products you regularly use in your personal or professional life. Where might they have contributed to or amplified any of the distortions? What design features can increase or combat distortion in our shared understanding?

I have fallen, more or less strongly, for most of the distortions, having been an early adopter of the mainstream social media platforms. After about 15 years of being subjected to the manipulations, I had a late but exponentially growing awareness which spurred me to reclaim my mental health and my social and societal sanity. I consider myself in recovery and find it more useful to protect myself first, and then the people in my immediate circles, by choosing whether or not to let outside signal and noise onto my radar, and by approaching information with care. I believe that at-scale, three-pronged methods combining education on bias, design choices that optimise for people’s benefit, and corporate communication may contribute to reducing distortions and improving shared understanding.

Rebuilding shared understanding

“Democracy cannot survive when the primary information source that its citizens use is based on an operating model that customizes individual realities and rewards the most viral content.”

Center for Humane Technology

How can design choices steward trust and mutual understanding? How do we get to a healthier information ecosystem?

Fight the race for human attention

- Helpful friction (such as sharing limits, prompting people to read before sharing, or to revise their language when harmful language is detected) can have notable results. According to Twitter, 34% of the people who were prompted to revise their language either did so or refrained from posting.

- Optimize algorithms to create more “small peak” winners instead of a small number of “giant peak” ones.

- Apply to online spaces the lessons of physical spaces and of how they are regulated (such as blocking political ads, or limiting their targeting precision, at election time).

- Build empathy by surfacing people’s backgrounds and conditions. Encourage curiosity, not judgement or hate.

- Invite one-on-one conversations over public broadcast.

- Provide avenues for de-escalation of online disagreement.

Allow for addressing crises

- Assume that crises will happen and plan for rapid response.

- Seek to identify negative externalities among non-users to grow the ability to make design choices that contribute to a more resilient social fabric.

- Maintain cross-team collaboration so that no bad design decisions are made in isolation (e.g., are the teams which may be at the origin of harmful features enough in touch with those that mitigate or fix the features?).

- Enable “blackouts” where features that may create harms are turned off during critical periods (e.g., in the run-up to an election, turning off recommendations, microtargeting, trends, ads, and autocompletion suggestions).

Heal the years of toxic conditioning and mental habits, recover and re-train

- Call out the harms so that there is mutual recognition. Public educational materials like the documentary “The Social Dilemma” should be distributed.

- Teach/learn to cultivate intellectual humility, explore worldviews, reject the culture of contempt.

- Rehumanize, then de-polarize.

- Design to reach consensus in spite of / in harmony with differences.

- Build smaller places for facilitated conversations where participants don’t compete for the attention of large audiences, but can see and enjoy others’ humanity and rich diversity.

Mind the (perception) gap

A perception gap is the body of false beliefs about another party and about that party’s beliefs. It leads to polarization. For instance, the perception gap leads to the incorrect belief that people hold views that are more extreme than they actually are. The negative side effect is that people see each other as enemies. Very engaged parties want to win over the others at all costs, while the exhausted majority simply tunes out. Both outcomes are negative for shared understanding (or for democracy, in the case of political polarization).

Bridging the perception gap is a first step toward minimizing division, becoming willing to find common ground, overcoming mistrust, and making progress.

🤔 Personal reflection: The perception gap

The degree of perception gap matches the degree of dehumanization of the other side: the higher the perception gap the more likely someone was to find the other side bigoted, hateful, and morally disgusting. How might you be able to reach out to someone unlike you and better learn about their perspective on key issues? How might the “perception gap” concept and measurements inform the development of technology that creates shared understanding?

Independent and neutral parties could, at critical times like election periods or social upheaval, shed a nuanced light on the gap and so help bridge polarised parties. Failing that, it takes a great deal of zen, because there is a cost in time and energy, and a personal psychological risk, in approaching people who may not see or recognise the good faith of a first step, or be willing to find common ground. Surfacing elements of the perception gap, to the extent that it is known or can be deduced, and displaying these as helpful indicators, would go a long way toward recalibrating one’s perspectives. Proprietary platforms may not always suggest varied sources of information, but anything that strikes a compromise between integrity and profit is a step towards progress.